Skeleton-based Action Recognition Models

AGCN

Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition

Abstract

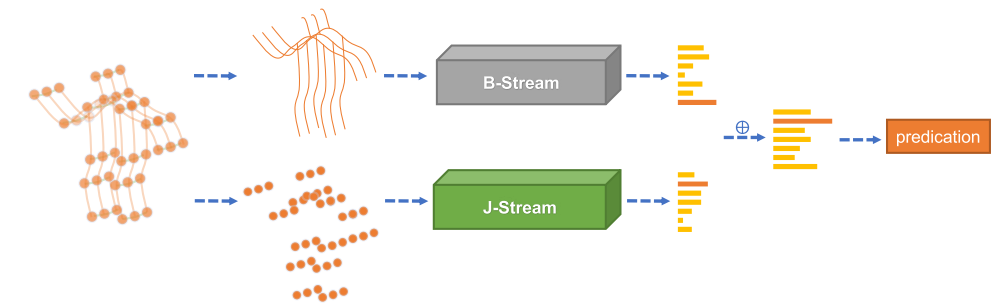

In skeleton-based action recognition, graph convolutional networks (GCNs), which model the human body skeletons as spatiotemporal graphs, have achieved remarkable performance. However, in existing GCN-based methods, the topology of the graph is set manually, and it is fixed over all layers and input samples. This may not be optimal for the hierarchical GCN and diverse samples in action recognition tasks. In addition, the second-order information (the lengths and directions of bones) of the skeleton data, which is naturally more informative and discriminative for action recognition, is rarely investigated in existing methods. In this work, we propose a novel two-stream adaptive graph convolutional network (2s-AGCN) for skeleton-based action recognition. The topology of the graph in our model can be either uniformly or individually learned by the BP algorithm in an end-to-end manner. This data-driven method increases the flexibility of the model for graph construction and brings more generality to adapt to various data samples. Moreover, a two-stream framework is proposed to model both the first-order and the second-order information simultaneously, which shows notable improvement for the recognition accuracy. Extensive experiments on the two large-scale datasets, NTU-RGBD and Kinetics-Skeleton, demonstrate that the performance of our model exceeds the state-of-the-art with a significant margin.
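The adaptive topology described in the abstract can be illustrated with a small NumPy sketch of one graph-convolution subset. Three graphs are summed before aggregating neighbours: the fixed physical adjacency `A`, a matrix `B` that would be learned freely by backpropagation, and a sample-dependent matrix `C` obtained by a softmax over embedded joint-to-joint similarities. This is an illustration of the idea, not the 2s-AGCN code: the function names, tensor layout, and the use of plain arrays in place of trainable layers are all assumptions, and training is omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def adaptive_graph_conv(x, A, B, theta, phi, W):
    """One subset of an adaptive graph convolution (illustrative sketch).

    x:          (C_in, T, V) features of one skeleton sequence
    A:          (V, V) fixed, normalized physical adjacency
    B:          (V, V) adjacency that would be learned freely in training
    theta, phi: (C_in, C_emb) embedding projections for the data-dependent graph
    W:          (C_out, C_in) output channel projection
    """
    _, _, V = x.shape
    # Embed every joint across all frames: (V, C_emb * T)
    emb_a = np.einsum("ctv,ce->vet", x, theta).reshape(V, -1)
    emb_b = np.einsum("ctv,ce->vet", x, phi).reshape(V, -1)
    # Sample-specific graph: softmax-normalized joint-to-joint similarity
    C = softmax(emb_a @ emb_b.T, axis=-1)
    adj = A + B + C
    # Aggregate features over connected joints, then mix channels
    agg = np.einsum("ctv,vw->ctw", x, adj)
    return np.einsum("oc,ctw->otw", W, agg)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    V, T, C_in, C_emb, C_out = 25, 4, 3, 8, 16  # 25 joints as in NTU RGB+D
    x = rng.standard_normal((C_in, T, V))
    A = np.eye(V)  # placeholder; a real A encodes the skeleton's bones
    B = np.zeros((V, V))
    theta = rng.standard_normal((C_in, C_emb))
    phi = rng.standard_normal((C_in, C_emb))
    W = rng.standard_normal((C_out, C_in))
    print(adaptive_graph_conv(x, A, B, theta, phi, W).shape)  # (16, 4, 25)
```

Because `C` is recomputed from each input, two different samples can end up with different effective graphs, which is the flexibility the abstract contrasts with a manually fixed topology.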

Results and Models

NTU60_XSub

| config | type | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| 2sagcn_80e_ntu60_xsub_keypoint_3d | joint | 1 | AGCN | 86.06 | ckpt | log | json |
| 2sagcn_80e_ntu60_xsub_bone_3d | bone | 2 | AGCN | 86.89 | ckpt | log | json |
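The bone stream feeds the network second-order information (bone lengths and directions) instead of joint coordinates. A minimal sketch of that conversion follows, assuming a toy five-joint skeleton: the `PAIRS` list and `joints_to_bones` are hypothetical, and the real NTU RGB+D skeleton (25 joints) uses its own (child, parent) pairing.

```python
import numpy as np

# Hypothetical toy skeleton as (child, parent) pairs; joint 0 is the root and
# is paired with itself, so its bone is a zero vector.
PAIRS = [(0, 0), (1, 0), (2, 1), (3, 2), (4, 1)]


def joints_to_bones(joints, pairs=PAIRS):
    """Convert joint coordinates (T, V, 3) into bone vectors (T, V, 3).

    Each bone points from the parent (source) joint to the child (target)
    joint, so its norm is the bone length and its direction the bone direction.
    """
    bones = np.zeros_like(joints)
    for child, parent in pairs:
        bones[:, child] = joints[:, child] - joints[:, parent]
    return bones


if __name__ == "__main__":
    joints = np.random.default_rng(0).standard_normal((2, 5, 3))  # T=2, V=5
    bones = joints_to_bones(joints)
    print(np.linalg.norm(bones, axis=-1))  # bone lengths, shape (2, 5)
```

Giving the root an empty (zero) bone keeps one bone per joint, so the bone stream can reuse the same graph and network design as the joint stream.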

Train

Use the following command to train a model.

```shell
python tools/train.py ${CONFIG_FILE} [optional arguments]
```

Example: train the AGCN model on the joint data of NTU60 in deterministic mode with periodic validation.

```shell
python tools/train.py configs/skeleton/2s-agcn/2sagcn_80e_ntu60_xsub_keypoint_3d.py \
    --work-dir work_dirs/2sagcn_80e_ntu60_xsub_keypoint_3d \
    --validate --seed 0 --deterministic
```

Example: train the AGCN model on the bone data of NTU60 in deterministic mode with periodic validation.

```shell
python tools/train.py configs/skeleton/2s-agcn/2sagcn_80e_ntu60_xsub_bone_3d.py \
    --work-dir work_dirs/2sagcn_80e_ntu60_xsub_bone_3d \
    --validate --seed 0 --deterministic
```

For more details, refer to the Training setting section in getting_started.

Test

Use the following command to test a model.

```shell
python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]
```

Example: test the AGCN model on the joint data of NTU60 and dump the results to a pickle file.

```shell
python tools/test.py configs/skeleton/2s-agcn/2sagcn_80e_ntu60_xsub_keypoint_3d.py \
    checkpoints/SOME_CHECKPOINT.pth --eval top_k_accuracy mean_class_accuracy \
    --out joint_result.pkl
```

Example: test the AGCN model on the bone data of NTU60 and dump the results to a pickle file.

```shell
python tools/test.py configs/skeleton/2s-agcn/2sagcn_80e_ntu60_xsub_bone_3d.py \
    checkpoints/SOME_CHECKPOINT.pth --eval top_k_accuracy mean_class_accuracy \
    --out bone_result.pkl
```

For more details, refer to the Test a dataset section in getting_started.
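Dumping both the joint and bone results makes it possible to combine the two streams by late score fusion. A minimal sketch follows, assuming each pickle written by `--out` holds one class-score array per test sample in dataset order (verify this against your version of the tools). `load_scores`, `fuse_scores`, and `top1_from_scores` are hypothetical helpers, and equal stream weights are only an illustrative default.

```python
import pickle

import numpy as np


def load_scores(path):
    """Load a result file dumped with --out.

    Assumption: the file holds a list with one class-score array per test
    sample, in dataset order.
    """
    with open(path, "rb") as f:
        return np.stack([np.asarray(s) for s in pickle.load(f)])


def fuse_scores(stream_scores, weights):
    """Weighted sum of (num_samples, num_classes) score matrices."""
    return sum(w * np.asarray(s) for w, s in zip(weights, stream_scores))


def top1_from_scores(scores, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    return float(np.mean(np.argmax(scores, axis=1) == np.asarray(labels)))


if __name__ == "__main__":
    # Toy scores for 2 samples x 2 classes (not real model outputs).
    joint = np.array([[0.9, 0.1], [0.4, 0.6]])
    bone = np.array([[0.2, 0.8], [0.1, 0.9]])
    labels = [0, 1]
    print(top1_from_scores(bone, labels))  # 0.5
    print(top1_from_scores(fuse_scores([joint, bone], [1.0, 1.0]), labels))  # 1.0
```

With real files, `load_scores("joint_result.pkl")` and `load_scores("bone_result.pkl")` would replace the toy arrays, and the ground-truth labels would come from the test annotation file.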

Citation

```bibtex
@inproceedings{shi2019two,
  title={Two-stream adaptive graph convolutional networks for skeleton-based action recognition},
  author={Shi, Lei and Zhang, Yifan and Cheng, Jian and Lu, Hanqing},
  booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},
  pages={12026--12035},
  year={2019}
}
```

PoseC3D

Revisiting Skeleton-based Action Recognition

Abstract

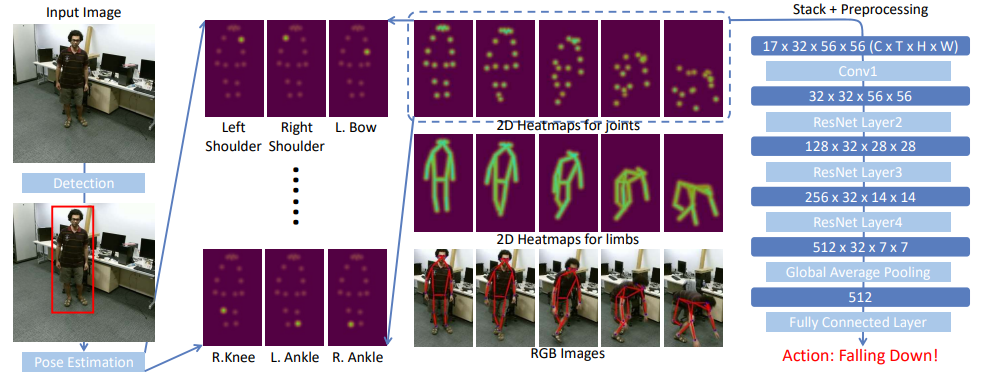

Human skeleton, as a compact representation of human action, has received increasing attention in recent years. Many skeleton-based action recognition methods adopt graph convolutional networks (GCN) to extract features on top of human skeletons. Despite the positive results shown in previous works, GCN-based methods are subject to limitations in robustness, interoperability, and scalability. In this work, we propose PoseC3D, a new approach to skeleton-based action recognition, which relies on a 3D heatmap stack instead of a graph sequence as the base representation of human skeletons. Compared to GCN-based methods, PoseC3D is more effective in learning spatiotemporal features, more robust against pose estimation noises, and generalizes better in cross-dataset settings. Also, PoseC3D can handle multiple-person scenarios without additional computation cost, and its features can be easily integrated with other modalities at early fusion stages, which provides a great design space to further boost the performance. On four challenging datasets, PoseC3D consistently obtains superior performance, when used alone on skeletons and in combination with the RGB modality.
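To make the 3D heatmap stack concrete, the NumPy sketch below renders one Gaussian per keypoint per frame, with its peak scaled by the keypoint confidence, and stacks the maps into a (K, T, H, W) volume. It assumes a single person and keypoints already rescaled to heatmap pixel coordinates; the function name, the sizes in the demo, and the `sigma` default are illustrative, not PoseC3D's configuration.

```python
import numpy as np


def keypoint_heatmap_volume(kpts, scores, height, width, sigma=1.0):
    """Stack per-frame Gaussian keypoint heatmaps into a (K, T, H, W) volume.

    kpts:   (T, K, 2) keypoint (x, y) coordinates in heatmap pixels
    scores: (T, K) keypoint confidences; each Gaussian peak equals its score
    """
    T, K, _ = kpts.shape
    ys, xs = np.mgrid[0:height, 0:width]  # row (y) and column (x) indices
    volume = np.zeros((K, T, height, width), dtype=np.float32)
    for t in range(T):
        for k in range(K):
            x, y = kpts[t, k]
            d2 = (xs - x) ** 2 + (ys - y) ** 2
            volume[k, t] = scores[t, k] * np.exp(-d2 / (2 * sigma**2))
    return volume


if __name__ == "__main__":
    T, K, H, W = 8, 17, 56, 56  # illustrative: 17 COCO keypoints, 56x56 grid
    rng = np.random.default_rng(0)
    kpts = rng.uniform(0, 55, size=(T, K, 2))
    scores = rng.uniform(0.5, 1.0, size=(T, K))
    print(keypoint_heatmap_volume(kpts, scores, H, W).shape)  # (17, 8, 56, 56)
```

The resulting volume is a regular 4D tensor, which is what lets a 3D-CNN such as SlowOnly-R50 consume it directly instead of a graph sequence.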

Figure panels: Pose Estimation Results; Keypoint Heatmap Volume Visualization; Limb Heatmap Volume Visualization.

Results and Models

FineGYM

| config | pseudo heatmap | gpus | backbone | Mean Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| slowonly_r50_u48_240e_gym_keypoint | keypoint | 8 x 2 | SlowOnly-R50 | 93.7 | ckpt | log | json |
| slowonly_r50_u48_240e_gym_limb | limb | 8 x 2 | SlowOnly-R50 | 94.0 | ckpt | log | json |
| Fusion | | | | 94.3 | | | |
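The limb rows use pseudo heatmaps drawn along skeleton limbs rather than at individual keypoints. Below is a NumPy sketch for one limb in one frame, assuming each pixel's value decays with its distance to the segment between the two endpoint keypoints and the peak is scaled by the smaller endpoint confidence; `limb_heatmap` and its `sigma` default are illustrative.

```python
import numpy as np


def limb_heatmap(p_a, p_b, c_a, c_b, height, width, sigma=1.0):
    """Gaussian heatmap of one limb (segment p_a -> p_b) for one frame.

    p_a, p_b: (x, y) endpoint coordinates in heatmap pixels
    c_a, c_b: endpoint confidences; the peak value is min(c_a, c_b)
    """
    ys, xs = np.mgrid[0:height, 0:width].astype(np.float64)
    a = np.asarray(p_a, dtype=np.float64)
    ab = np.asarray(p_b, dtype=np.float64) - a
    seg_len2 = float(ab @ ab)
    if seg_len2 == 0.0:
        # Degenerate limb: both endpoints coincide, so treat it as a point
        t = np.zeros_like(xs)
    else:
        # Project each pixel onto the segment and clip to its endpoints
        t = np.clip(((xs - a[0]) * ab[0] + (ys - a[1]) * ab[1]) / seg_len2, 0.0, 1.0)
    # Squared distance from each pixel to its closest point on the segment
    d2 = (xs - (a[0] + t * ab[0])) ** 2 + (ys - (a[1] + t * ab[1])) ** 2
    return min(c_a, c_b) * np.exp(-d2 / (2 * sigma**2))


if __name__ == "__main__":
    hm = limb_heatmap((1, 2), (4, 2), 0.9, 0.7, height=5, width=6)
    print(hm.round(2))
```

Rendering one such map per limb and per frame and stacking them yields a limb volume of shape (num_limbs, T, H, W), analogous to the keypoint volume.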

NTU60_XSub

| config | pseudo heatmap | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| slowonly_r50_u48_240e_ntu60_xsub_keypoint | keypoint | 8 x 2 | SlowOnly-R50 | 93.7 | ckpt | log | json |
| slowonly_r50_u48_240e_ntu60_xsub_limb | limb | 8 x 2 | SlowOnly-R50 | 93.4 | ckpt | log | json |
| Fusion | | | | 94.1 | | | |

NTU120_XSub

| config | pseudo heatmap | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| slowonly_r50_u48_240e_ntu120_xsub_keypoint | keypoint | 8 x 2 | SlowOnly-R50 | 86.3 | ckpt | log | json |
| slowonly_r50_u48_240e_ntu120_xsub_limb | limb | 8 x 2 | SlowOnly-R50 | 85.7 | ckpt | log | json |
| Fusion | | | | 86.9 | | | |

UCF101

| config | pseudo heatmap | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| slowonly_kinetics400_pretrained_r50_u48_120e_ucf101_split1_keypoint | keypoint | 8 | SlowOnly-R50 | 87.0 | ckpt | log | json |

HMDB51

| config | pseudo heatmap | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| slowonly_kinetics400_pretrained_r50_u48_120e_hmdb51_split1_keypoint | keypoint | 8 | SlowOnly-R50 | 69.3 | ckpt | log | json |

Note

The gpus column indicates the number of GPUs used to obtain each checkpoint. Note that the provided configs assume 8 GPUs by default. If you use a different number of GPUs or videos per GPU, set the learning rate in proportion to the total batch size according to the Linear Scaling Rule, e.g., lr=0.01 for 8 GPUs x 8 videos/gpu and lr=0.04 for 16 GPUs x 16 videos/gpu.
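The Linear Scaling Rule reduces to one line of arithmetic: the new learning rate is the reference learning rate multiplied by the ratio of total batch sizes (GPUs x videos per GPU). In the example above, 8 x 8 = 64 clips become 16 x 16 = 256 clips, a 4x larger batch, so 0.01 becomes 0.04. A small sketch, where `scale_lr` is a hypothetical helper rather than part of the toolkit:

```python
def scale_lr(base_lr, base_gpus, base_videos_per_gpu, gpus, videos_per_gpu):
    """Linear Scaling Rule: scale the learning rate with the total batch size."""
    base_batch = base_gpus * base_videos_per_gpu
    new_batch = gpus * videos_per_gpu
    return base_lr * new_batch / base_batch


if __name__ == "__main__":
    # 8 GPUs x 8 videos = 64 clips at lr=0.01; 16 x 16 = 256 clips is 4x larger
    print(scale_lr(0.01, 8, 8, 16, 16))  # 0.04
```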

Follow the guide in Preparing Skeleton Dataset to obtain the skeleton annotations used in the above configs.

Train

Use the following command to train a model.

```shell
python tools/train.py ${CONFIG_FILE} [optional arguments]
```

Example: train the PoseC3D model on FineGYM in deterministic mode with periodic validation.

```shell
python tools/train.py configs/skeleton/posec3d/slowonly_r50_u48_240e_gym_keypoint.py \
    --work-dir work_dirs/slowonly_r50_u48_240e_gym_keypoint \
    --validate --seed 0 --deterministic
```

To train on your own dataset, refer to Custom Dataset Training.

For more details, refer to the Training setting section in getting_started.

Test

Use the following command to test a model.

```shell
python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]
```

Example: test the PoseC3D model on FineGYM and dump the results to a pickle file.

```shell
python tools/test.py configs/skeleton/posec3d/slowonly_r50_u48_240e_gym_keypoint.py \
    checkpoints/SOME_CHECKPOINT.pth --eval top_k_accuracy mean_class_accuracy \
    --out result.pkl
```

For more details, refer to the Test a dataset section in getting_started.

Citation

```bibtex
@misc{duan2021revisiting,
  title={Revisiting Skeleton-based Action Recognition},
  author={Haodong Duan and Yue Zhao and Kai Chen and Dian Shao and Dahua Lin and Bo Dai},
  year={2021},
  eprint={2104.13586},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

STGCN

Spatial temporal graph convolutional networks for skeleton-based action recognition

Abstract

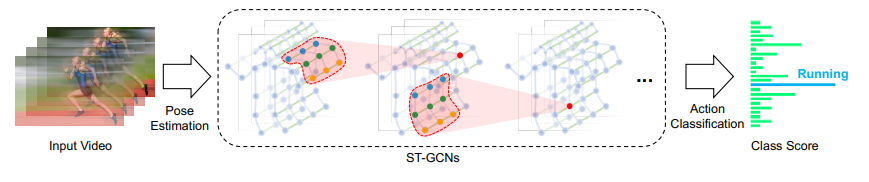

Dynamics of human body skeletons convey significant information for human action recognition. Conventional approaches for modeling skeletons usually rely on hand-crafted parts or traversal rules, thus resulting in limited expressive power and difficulties of generalization. In this work, we propose a novel model of dynamic skeletons called Spatial-Temporal Graph Convolutional Networks (ST-GCN), which moves beyond the limitations of previous methods by automatically learning both the spatial and temporal patterns from data. This formulation not only leads to greater expressive power but also stronger generalization capability. On two large datasets, Kinetics and NTU-RGBD, it achieves substantial improvements over mainstream methods.
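The spatial-temporal idea can be sketched in NumPy as a graph convolution over joints followed by a convolution over frames. This is a simplification under stated assumptions: it uses a single adjacency matrix with the symmetric normalization D^-1/2 (A + I) D^-1/2 and one temporal kernel shared by all channels, whereas ST-GCN learns full temporal convolutions and splits neighbours into several partitions. All names are illustrative.

```python
import numpy as np


def normalize_adjacency(A):
    """Add self-loops and normalize symmetrically: D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def st_graph_conv(x, A_norm, W, temporal_kernel):
    """One simplified spatial-temporal graph convolution.

    x:               (C_in, T, V) joint features over T frames
    A_norm:          (V, V) normalized adjacency of the skeleton graph
    W:               (C_out, C_in) channel projection
    temporal_kernel: 1D weights shared by all channels and joints (odd length)
    """
    # Spatial step: aggregate each joint's neighbours, then project channels
    h = np.einsum("ctv,vw->ctw", x, A_norm)
    h = np.einsum("oc,ctw->otw", W, h)
    # Temporal step: slide the kernel along T with zero padding ("same" length)
    pad = len(temporal_kernel) // 2
    hp = np.pad(h, ((0, 0), (pad, pad), (0, 0)))
    out = np.zeros_like(h)
    for i, w in enumerate(temporal_kernel):
        out += w * hp[:, i:i + h.shape[1], :]
    return out


if __name__ == "__main__":
    # Toy 3-joint chain 0-1-2; a real skeleton graph follows the body's bones
    A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
    x = np.random.default_rng(0).standard_normal((2, 5, 3))  # C_in=2, T=5, V=3
    W = np.ones((4, 2))
    out = st_graph_conv(x, normalize_adjacency(A), W, [0.25, 0.5, 0.25])
    print(out.shape)  # (4, 5, 3)
```

Stacking several such layers lets information propagate across both more distant joints and longer time spans, which is how the model learns spatial and temporal patterns from data rather than from hand-crafted traversal rules.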

Results and Models

NTU60_XSub

| config | keypoint | gpus | backbone | Top-1 | ckpt | log | json |
|---|---|---|---|---|---|---|---|
| stgcn_80e_ntu60_xsub_keypoint | 2d | 2 | STGCN | 86.91 | ckpt | log | json |
| stgcn_80e_ntu60_xsub_keypoint_3d | 3d | 1 | STGCN | 84.61 | ckpt | log | json |

BABEL

| config | gpus | backbone | Top-1 | Mean Top-1 | Top-1 Official (AGCN) | Mean Top-1 Official (AGCN) | ckpt | log |
|---|---|---|---|---|---|---|---|---|
| stgcn_80e_babel60 | 8 | ST-GCN | 42.39 | 28.28 | 41.14 | 24.46 | ckpt | log |
| stgcn_80e_babel60_wfl | 8 | ST-GCN | 40.31 | 29.79 | 33.41 | 30.42 | ckpt | log |
| stgcn_80e_babel120 | 8 | ST-GCN | 38.95 | 20.58 | 38.41 | 17.56 | ckpt | log |
| stgcn_80e_babel120_wfl | 8 | ST-GCN | 33.00 | 24.33 | 27.91 | 26.17* | ckpt | log |

* This number is copied from the paper; the released checkpoints for BABEL-120 perform worse.
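BABEL is long-tailed, which is why Top-1 and Mean Top-1 differ so much in the table above. Mean Top-1 presumably corresponds to mean class accuracy (the `mean_class_accuracy` metric in the test commands): accuracy is computed per class and then averaged, so rare classes weigh as much as frequent ones. A pure-Python sketch of both metrics, with illustrative function names:

```python
from collections import defaultdict


def top1_accuracy(preds, labels):
    """Fraction of samples whose predicted class equals the label."""
    return sum(pred == label for pred, label in zip(preds, labels)) / len(labels)


def mean_class_top1(preds, labels):
    """Top-1 accuracy per ground-truth class, averaged over those classes."""
    correct, total = defaultdict(int), defaultdict(int)
    for pred, label in zip(preds, labels):
        total[label] += 1
        correct[label] += int(pred == label)
    return sum(correct[c] / total[c] for c in total) / len(total)


if __name__ == "__main__":
    # Long-tailed toy split: class 0 dominates; the model always predicts 0.
    labels = [0] * 8 + [1, 2]
    preds = [0] * 10
    print(top1_accuracy(preds, labels))  # 0.8
    print(mean_class_top1(preds, labels))  # 0.3333333333333333
```

A classifier that favours frequent classes can keep a high Top-1 while its Mean Top-1 drops, which is the trade-off visible between the plain and `_wfl` rows.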

Train

Use the following command to train a model.

```shell
python tools/train.py ${CONFIG_FILE} [optional arguments]
```

Example: train the STGCN model on NTU60 in deterministic mode with periodic validation.

```shell
python tools/train.py configs/skeleton/stgcn/stgcn_80e_ntu60_xsub_keypoint.py \
    --work-dir work_dirs/stgcn_80e_ntu60_xsub_keypoint \
    --validate --seed 0 --deterministic
```

For more details, refer to the Training setting section in getting_started.

Test

Use the following command to test a model.

```shell
python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]
```

Example: test the STGCN model on NTU60 and dump the results to a pickle file.

```shell
python tools/test.py configs/skeleton/stgcn/stgcn_80e_ntu60_xsub_keypoint.py \
    checkpoints/SOME_CHECKPOINT.pth --eval top_k_accuracy mean_class_accuracy \
    --out result.pkl
```

For more details, refer to the Test a dataset section in getting_started.

Citation

```bibtex
@inproceedings{yan2018spatial,
  title={Spatial temporal graph convolutional networks for skeleton-based action recognition},
  author={Yan, Sijie and Xiong, Yuanjun and Lin, Dahua},
  booktitle={Thirty-second AAAI conference on artificial intelligence},
  year={2018}
}
```